Expanding the Platform with AI-Driven Business Intelligence

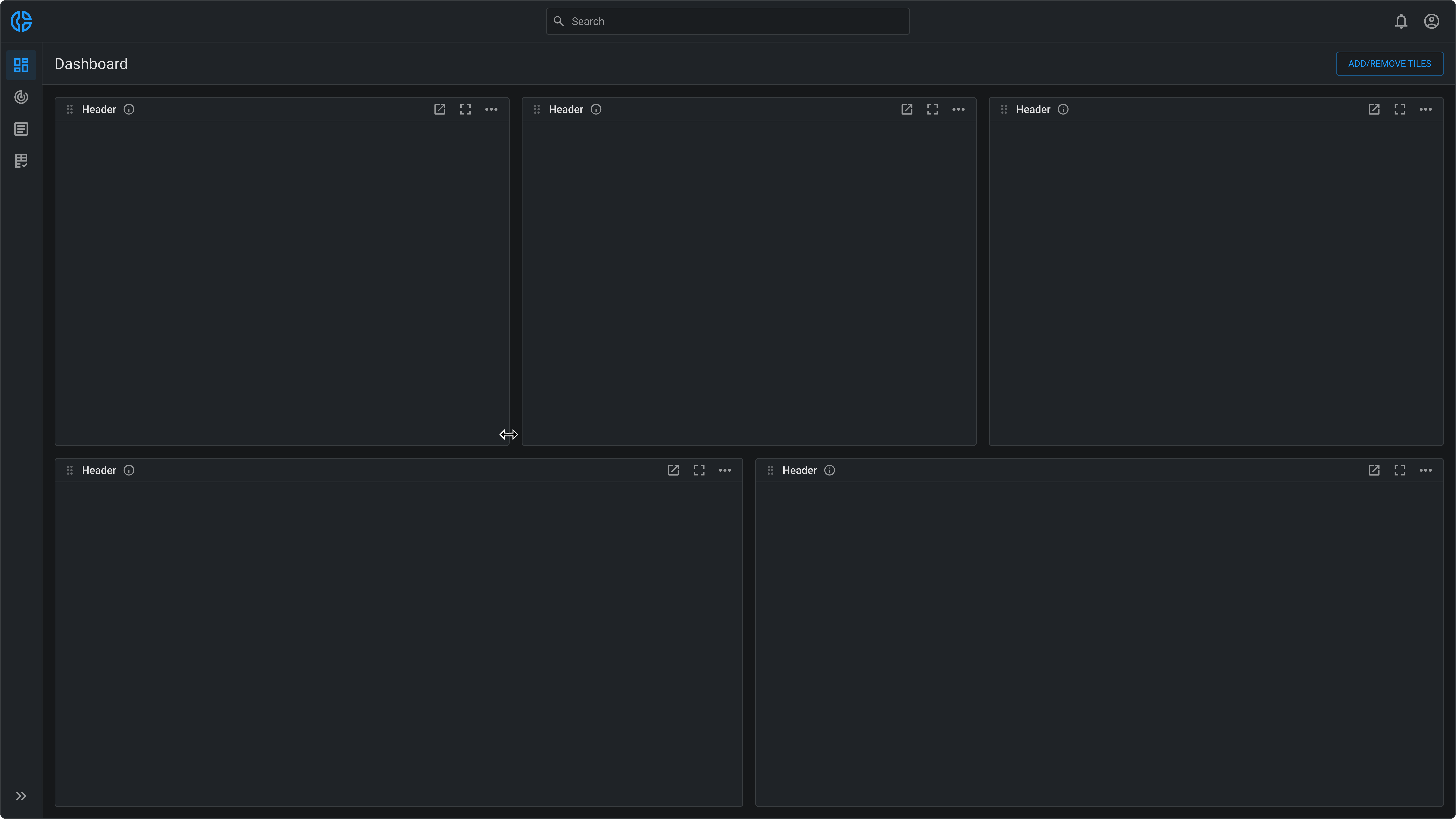

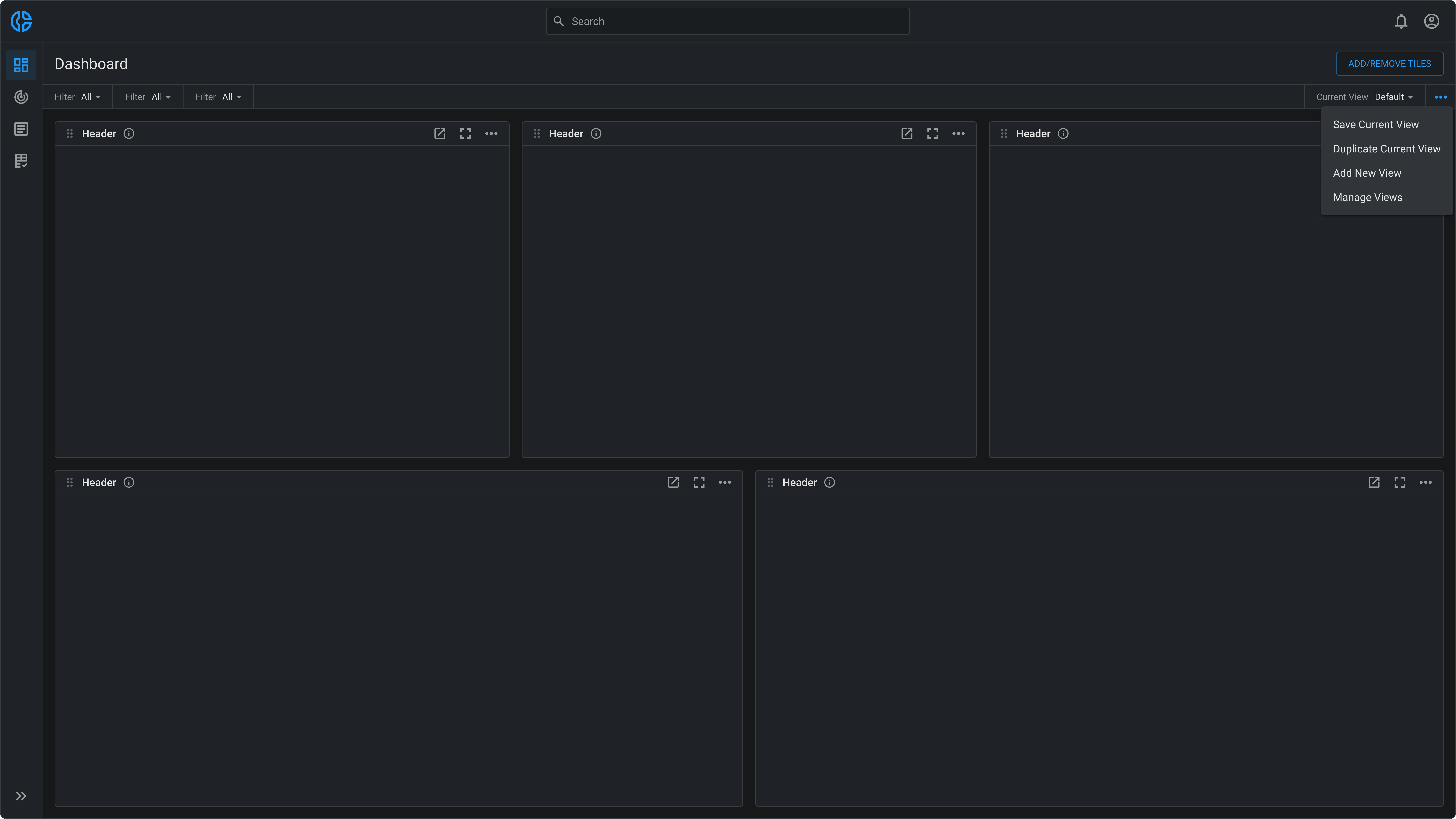

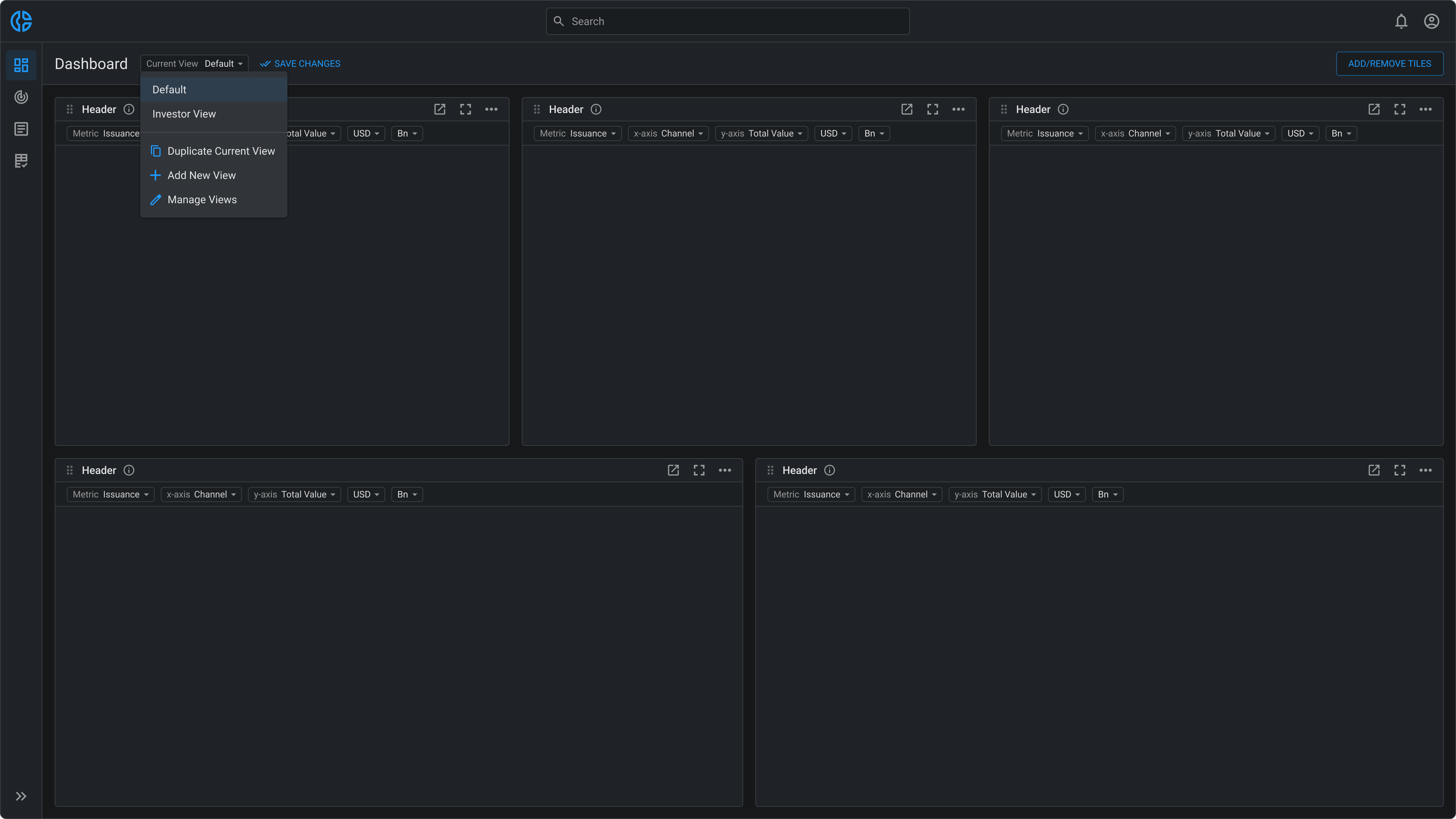

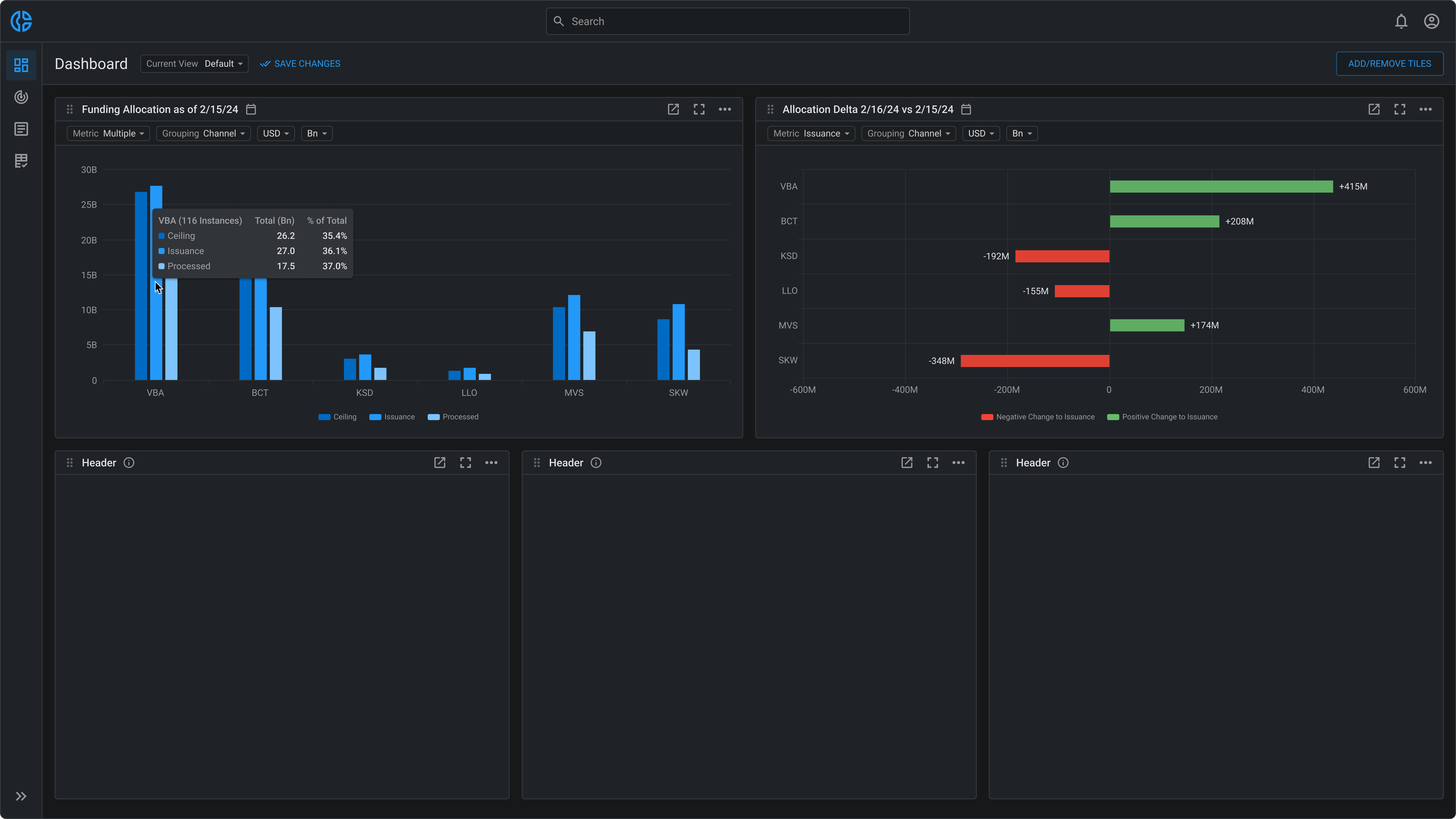

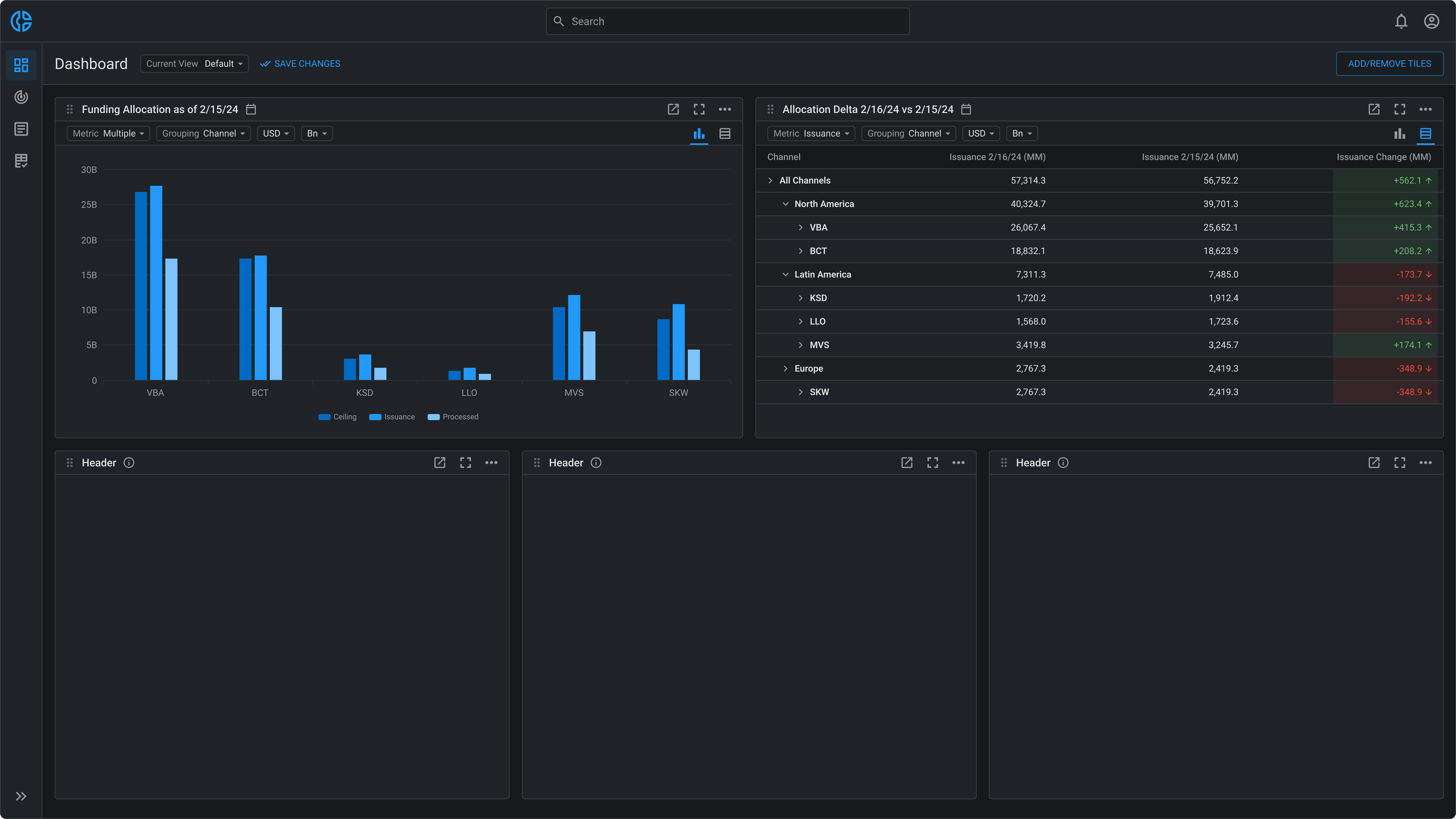

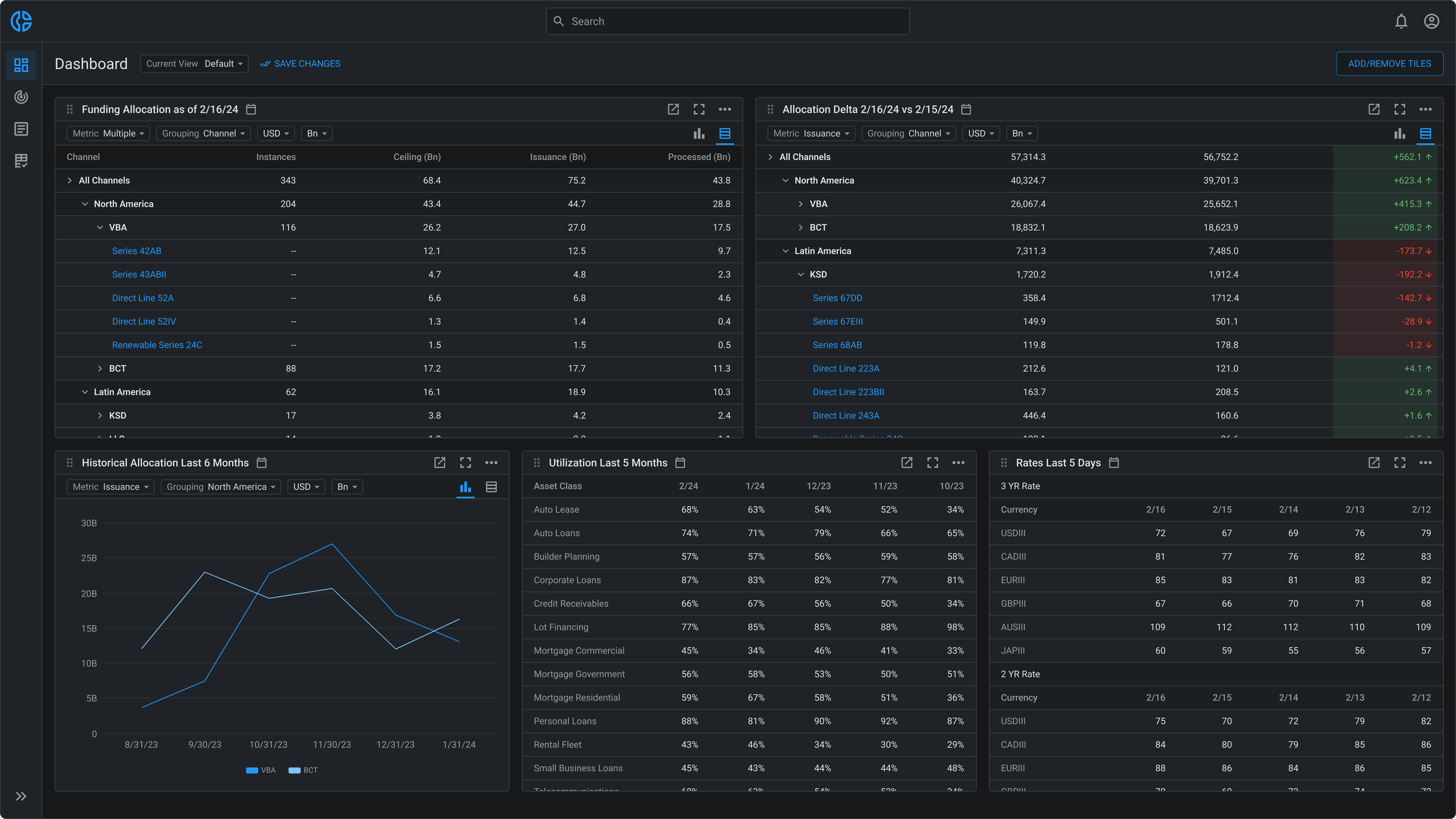

Building on the platform’s success in automating forecasting, we are now adding an AI-driven Business Intelligence layer to the securitization ecosystem.

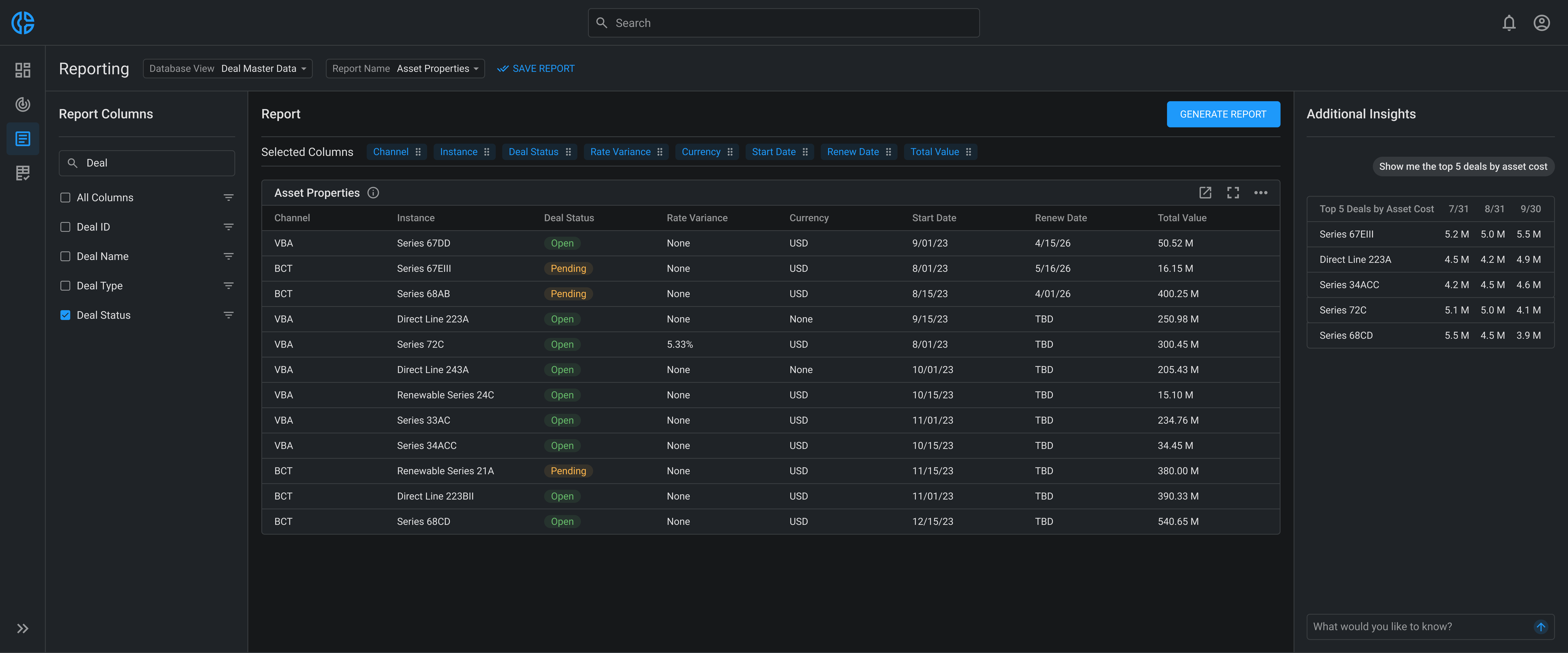

This capability lets users select the specific data columns they want analyzed, auto-generate reports, and then use natural language to surface deeper insights. For example, users can ask "Show me the top five deals by asset cost" or "Which originators had the highest variance in forecast accuracy last quarter?" and receive instant, actionable answers in both tabular and visual formats.

The interaction model blends structured querying with flexible, conversational exploration. It bridges traditional BI workflows with large language model (LLM) capabilities, helping teams unlock new analytical depth without complicated setup.
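As a rough illustration of how a question like "Show me the top five deals by asset cost" could be answered once it has been translated into a structured form, here is a minimal Python sketch. It is not the prototype's actual implementation: `QuerySpec`, `run_query`, the column names, and the sample deals are all hypothetical, and the step where an LLM turns the question into a `QuerySpec` is assumed and left out.

```python
from dataclasses import dataclass


@dataclass
class QuerySpec:
    """Hypothetical structured form of a natural-language question."""
    sort_by: str
    descending: bool = True
    limit: int = 5


def run_query(rows, selected_columns, spec):
    """Rank rows per the spec and return only the user-selected columns."""
    if spec.sort_by not in selected_columns:
        raise ValueError(f"{spec.sort_by!r} is not among the selected columns")
    ranked = sorted(rows, key=lambda r: r[spec.sort_by], reverse=spec.descending)
    return [{col: r[col] for col in selected_columns} for r in ranked[:spec.limit]]


# Made-up sample data, for illustration only.
deals = [
    {"deal": "D-01", "originator": "Alpha", "asset_cost": 1_200_000},
    {"deal": "D-02", "originator": "Beta", "asset_cost": 3_400_000},
    {"deal": "D-03", "originator": "Alpha", "asset_cost": 950_000},
    {"deal": "D-04", "originator": "Gamma", "asset_cost": 2_750_000},
    {"deal": "D-05", "originator": "Beta", "asset_cost": 4_100_000},
    {"deal": "D-06", "originator": "Gamma", "asset_cost": 1_800_000},
]

# Assumed output of the (omitted) LLM step for
# "Show me the top five deals by asset cost".
spec = QuerySpec(sort_by="asset_cost", descending=True, limit=5)
top_five = run_query(deals, ["deal", "asset_cost"], spec)

for row in top_five:
    print(row["deal"], row["asset_cost"])
```

Keeping the LLM's output as a small, validated structure rather than free-form code is one possible design choice: the query can only touch columns the user has already selected, and the same result can feed both a table and a chart.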

Using Replit to Build Quickly

To create the proof of concept for this capability, I partnered with a developer to build a working prototype in Replit. He used my Figma designs as a baseline while shaping the querying logic. From there, I refined the UI directly, prompting the AI as if we were pair-programming. I adjusted UI styles, properties, and layout, applying the same front-end styling knowledge I bring to every implementation.

The prompting I use to refine the UI builds directly on years of hands-on front-end styling work: pairing with developers to make on-the-spot code adjustments and ensuring the delivered interface precisely matches the design intent.